tMatchIndex

Indexes a clean and deduplicated data set in Elasticsearch for continuous matching purposes.

Before using the tMatchIndex component, you must have performed all the matching and deduplicating tasks on this data set:

- You generated a pairing model and computed pairs of suspect duplicates using tMatchPairing.
- You labeled a sample of the suspect pairs manually or using Talend Data Stewardship to generate a matching model with tMatchModel.
- You predicted labels on suspect pairs based on the pairing and matching models using tMatchPredict.
- You cleaned and deduplicated the data set using tRuleSurvivorship.

Once these tasks are done, you do not need to restart the matching process from scratch when you get new data records having the same schema. You can index the clean data set in Elasticsearch using tMatchIndex for continuous matching purposes.

The tMatchIndex component supports Elasticsearch versions up to 6.4.2 and Apache Spark from version 2.0.0.
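These version constraints can be checked before you run a Job. The following Python sketch is an illustration only: the helper names are hypothetical, it assumes plain dotted numeric version strings, and it combines the 6.4.2 upper bound stated here with the "Elasticsearch 5+" requirement stated in the scenario later on this page.

```python
def parse_version(text: str) -> tuple:
    """Turn a dotted version string such as "6.4.2" into (6, 4, 2)."""
    return tuple(int(part) for part in text.strip().split("."))


def pad(version: tuple, length: int = 3) -> tuple:
    """Pad with zeros so that "6.4" compares like "6.4.0"."""
    return version + (0,) * (length - len(version))


# Bounds taken from this page; the 5.0.0 lower bound comes from the
# "Elasticsearch 5+" requirement given in the scenario.
ES_MIN = (5, 0, 0)
ES_MAX = (6, 4, 2)
SPARK_MIN = (2, 0, 0)


def is_supported(es_version: str, spark_version: str) -> bool:
    """Return True if both versions fall inside the documented ranges."""
    es = pad(parse_version(es_version))
    spark = pad(parse_version(spark_version))
    return ES_MIN <= es <= ES_MAX and spark >= SPARK_MIN
```

For example, is_supported("6.4.2", "2.3.0") returns True, while is_supported("6.5.0", "2.3.0") returns False because the Elasticsearch version is above the supported range.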

tMatchIndex properties for Apache Spark Batch

These properties are used to configure tMatchIndex running in the Spark Batch Job framework.

The Spark Batch tMatchIndex component belongs to the Data Quality family.

The component in this framework is available in all Talend Platform products with Big Data and in Talend Data Fabric.

Basic settings

| Property | Description |
|---|---|
| Define a storage configuration component | Select the configuration component to be used to provide the configuration information for the connection to the target file system. If you leave this check box clear, the target file system is the local system. The configuration component to be used must be present in the same Job. |
| Schema and Edit Schema | A schema is a row description. It defines the number of fields (columns) to be processed and passed on to the next component. Click Sync columns to retrieve the schema from the previous component connected in the Job. Click Edit schema to make changes to the schema. Read-only columns are added to the output schema: |
| | Built-In: You create and store the schema locally for this component. |
| | Repository: You have already created the schema and stored it in the Repository. |
| ElasticSearch configuration | Nodes: Enter the location of the cluster hosting the Elasticsearch system to be used. Index: Enter the name of the index to be created. Select the Reset index check box to clean the Elasticsearch index specified in the Index field. Note that the Talend components for Spark Jobs support the |
| Pairing | Pairing model folder: Set the path to the folder that contains the pairing model files generated by tMatchPairing. If you want to store the model in a specific file system, for example S3 The button for browsing does not work with the Spark tHDFSConfiguration |

Advanced settings

| Property | Description |
|---|---|
| Maximum ElasticSearch bulk size | Maximum number of records for bulk indexing. tMatchIndex uses bulk mode to index data so that big data sets can be indexed efficiently. It is recommended to leave the default value. If the Job execution ends |
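Bulk indexing sends many documents in one request instead of one request per record. The Python sketch below illustrates the idea only and does not reflect how tMatchIndex is implemented: it splits records into batches of at most max_bulk_size and serializes each batch in the newline-delimited JSON format of the Elasticsearch _bulk API. The function names are hypothetical, and the default "_doc" type name is an assumption: Elasticsearch 6.x expects a mapping type in each bulk action, so adapt it to your index.

```python
import json
from typing import Iterable, Iterator


def batches(records: Iterable[dict], max_bulk_size: int) -> Iterator[list]:
    """Yield successive lists holding at most max_bulk_size records."""
    if max_bulk_size < 1:
        raise ValueError("max_bulk_size must be at least 1")
    batch = []
    for record in records:
        batch.append(record)
        if len(batch) == max_bulk_size:
            yield batch
            batch = []
    if batch:  # flush the last, possibly smaller, batch
        yield batch


def bulk_body(batch: list, index: str, doc_type: str = "_doc") -> str:
    """Serialize one batch as an Elasticsearch _bulk request body (NDJSON).

    Each record becomes an action line followed by a source line,
    and the whole body must end with a newline.
    """
    lines = []
    for record in batch:
        lines.append(json.dumps({"index": {"_index": index, "_type": doc_type}}))
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"
```

With five records and a bulk size of 2, batches() yields three requests of 2, 2 and 1 records. A larger bulk size means fewer requests but bigger request bodies, which is the trade-off this setting controls.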

Usage

| Property | Description |
|---|---|
| Usage rule | This component is used as an end component and requires an input link. This component, along with the Spark Batch component Palette it belongs to, appears only when you are creating a Spark Batch Job. |
| Spark Batch Connection | In the Spark Configuration tab in the Run view, define the connection to a given Spark cluster for the whole Job. In addition, since the Job expects its dependent jar files for execution, you must specify the directory in the file system to which these jar files are transferred so that Spark can access these files: This connection is effective on a per-Job basis. |

Indexing a reference data set in Elasticsearch

This scenario applies only to subscription-based Talend Platform products with Big Data and Talend Data Fabric.

In this Job, the tMatchIndex component creates an index in Elasticsearch and populates it with a clean and deduplicated data set which contains a list of education centers in Chicago.

After performing all the matching actions on this data set, you do not need to restart the matching process from scratch when you get new data records having the same schema. You can index the clean data set in Elasticsearch using tMatchIndex for continuous matching purposes.

Before you begin:

- You generated a pairing model using tMatchPairing. You can find examples of how to generate a pairing model on Talend Help Center (https://help.talend.com).
- Make sure the input data you want to index is clean and deduplicated. You can find an example of how to clean and deduplicate a data set on Talend Help Center (https://help.talend.com).
- The Elasticsearch cluster must be running Elasticsearch 5+.

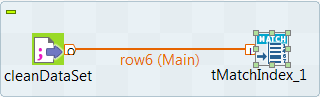

Setting up the Job

- Drop the following components from the Palette onto the design workspace: tFileInputDelimited and tMatchIndex.
- Connect the components using a Row > Main connection.

Selecting the Spark mode

Depending on the Spark cluster to be used, select a Spark mode for your Job.

The Spark documentation provides an exhaustive list of Spark properties and their default values at Spark Configuration. A Spark Job designed in the Studio uses this default configuration except for the properties you explicitly defined in the Spark Configuration tab or the components used in your Job.

- Click Run to open its view and then click the Spark Configuration tab to display its view for configuring the Spark connection.
- Select the Use local mode check box to test your Job locally. In the local mode, the Studio builds the Spark environment on the fly to run the Job in it. Each processor of the local machine is used as a Spark worker to perform the computations. In this mode, your local file system is used; therefore, deactivate the configuration components such as tS3Configuration or tHDFSConfiguration that provide connection information to a remote file system, if you have placed these components in your Job. You can launch your Job without any further configuration.

- Clear the Use local mode check box to display the list of the available Hadoop distributions and from this list, select the distribution corresponding to your Spark cluster to be used. This distribution could be:
  - For this distribution, Talend supports: Yarn client, Yarn cluster.
  - For this distribution, Talend supports: Standalone, Yarn client, Yarn cluster.
  - For this distribution, Talend supports: Yarn client.
  - For this distribution, Talend supports: Yarn client, Yarn cluster.
  - For this distribution, Talend supports: Standalone, Yarn client, Yarn cluster.
  - For this distribution, Talend supports: Yarn cluster.
  - Cloudera Altus: for this distribution, Talend supports Yarn cluster. Your Altus cluster should run on one of the following Cloud providers:
    - Azure (the support for Altus on Azure is a technical preview feature)
    - AWS

As a Job relies on Avro to move data among its components, it is recommended to set your cluster to use Kryo to handle the Avro types. This not only helps avoid this known Avro issue but also brings inherent performance gains. The Spark property to be set in your cluster is:

spark.serializer org.apache.spark.serializer.KryoSerializer

If you cannot find the distribution corresponding to yours in this drop-down list, this means the distribution you want to connect to is not officially supported by Talend. In this situation, you can select Custom, then select the Spark version of the cluster to be connected and click the [+] button to display the dialog box in which you can do either of the following:

- Select Import from existing version to import an officially supported distribution as base and then add other required jar files which the base distribution does not provide.
- Select Import from zip to import the configuration zip for the custom distribution to be used. This zip file should contain the libraries of the different Hadoop/Spark elements and the index file of these libraries. In Talend Exchange, members of the Talend community have shared some ready-for-use configuration zip files which you can download from this Hadoop configuration list and use directly in your connection. However, because of the ongoing evolution of the different Hadoop-related projects, you might not be able to find the configuration zip corresponding to your distribution in this list; in that case, it is recommended to use the Import from existing version option to take an existing distribution as base and add the jars required by your distribution.

Note that custom versions are not officially supported by Talend. Talend and its community provide you with the opportunity to connect to custom versions from the Studio but cannot guarantee that the configuration of whichever version you choose will be easy. As such, you should only attempt to set up such a connection if you have sufficient Hadoop and Spark experience to handle any issues on your own.

For a step-by-step example about how to connect to a custom distribution and share this connection, see Hortonworks.

Configuring the connection to the file system to be used by Spark

Skip this section if you are using Google Dataproc or HDInsight, as for these two distributions, this connection is configured in the Spark configuration tab.

- Double-click tHDFSConfiguration to open its Component view. Spark uses this component to connect to the HDFS system to which the jar files dependent on the Job are transferred.
- If you have defined the HDFS connection metadata under the Hadoop cluster node in Repository, select Repository from the Property type drop-down list and then click the […] button to select the HDFS connection you have defined from the Repository content wizard. For further information about setting up a reusable HDFS connection, search for centralizing HDFS metadata on Talend Help Center (https://help.talend.com). If you complete this step, you can skip the following steps about configuring tHDFSConfiguration because all the required fields should have been filled automatically.

- In the Version area, select the Hadoop distribution you need to connect to and its version.
- In the NameNode URI field, enter the location of the machine hosting the NameNode service of the cluster. If you are using WebHDFS, the location should be webhdfs://masternode:portnumber; WebHDFS with SSL is not supported yet.
- In the Username field, enter the authentication information used to connect to the HDFS system to be used. Note that the user name must be the same as the one you entered in the Spark configuration tab.
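The NameNode URI rules above can be checked before you run the Job. The following Python sketch is a hypothetical helper, not part of the Studio: it accepts hdfs:// and webhdfs:// URIs that carry a host and a port, and rejects swebhdfs://, the Hadoop scheme for WebHDFS over SSL, which this page states is not supported. Requiring an explicit port is an assumption made for the example.

```python
from urllib.parse import urlsplit

SUPPORTED_SCHEMES = {"hdfs", "webhdfs"}


def check_namenode_uri(uri: str) -> str:
    """Return an error message for an unsupported NameNode URI, or "" if it looks valid."""
    parts = urlsplit(uri)
    if parts.scheme == "swebhdfs":
        return "WebHDFS with SSL (swebhdfs) is not supported"
    if parts.scheme not in SUPPORTED_SCHEMES:
        return f"unexpected scheme: {parts.scheme!r}"
    if not parts.hostname:
        return "missing NameNode host"
    try:
        port = parts.port  # raises ValueError for a non-numeric or out-of-range port
    except ValueError:
        return "invalid port"
    if port is None:
        return "missing NameNode port"
    return ""
```

For example, check_namenode_uri("webhdfs://masternode:50070") returns an empty string, while a swebhdfs:// URI returns an error message.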

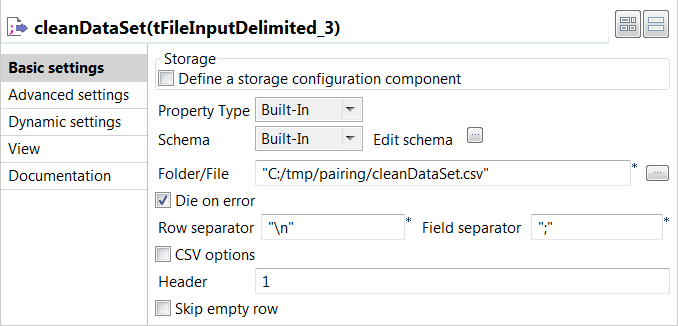

Configuring the input component

- Double-click the tFileInputDelimited component to open its Basic settings view and define its properties.
- Click the […] button next to Edit schema and use the [+] button in the dialog box to add String type columns: Original_Id, Source, Site_name and Address.
- In the Folder/File field, set the path to the input file.
- Set the row and field separators in the corresponding fields and the header and footer, if any.
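For reference, the following Python sketch mimics what this tFileInputDelimited configuration does: it reads a delimited file with one header row into records keyed by the four String columns of the schema. The semicolon field separator, the newline row separator, the single header line and the sample values are assumptions for the example, and footer handling is omitted; use the settings that match your own input file.

```python
import csv
import io
from typing import TextIO

# Schema defined in the Edit schema dialog box: four String columns.
COLUMNS = ["Original_Id", "Source", "Site_name", "Address"]


def read_delimited(stream: TextIO, field_sep: str = ";", header_lines: int = 1) -> list:
    """Read rows into dicts keyed by the schema columns, skipping header lines."""
    reader = csv.reader(stream, delimiter=field_sep)
    for _ in range(header_lines):
        next(reader, None)  # skip header rows, if any
    records = []
    for row in reader:
        if not row:
            continue  # ignore blank lines
        # Every value stays a string, as in the String-typed schema.
        records.append(dict(zip(COLUMNS, row)))
    return records


sample = io.StringIO(
    "Original_Id;Source;Site_name;Address\n"
    "1;source_a;site_1;address_1\n"
)
print(read_delimited(sample))
# [{'Original_Id': '1', 'Source': 'source_a', 'Site_name': 'site_1', 'Address': 'address_1'}]
```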

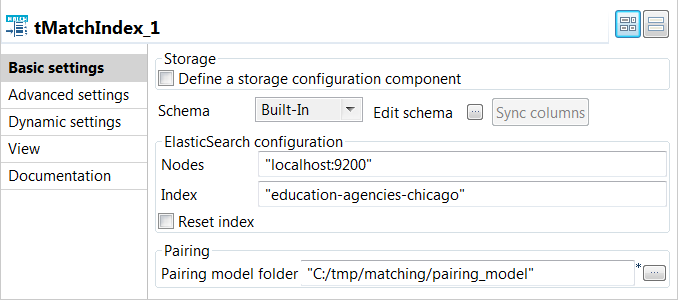

Indexing clean and deduplicated data in Elasticsearch

- Make sure that the Elasticsearch cluster and Elasticsearch-head are started before executing the Job. For more information about Elasticsearch-head, which is a plugin for browsing an Elasticsearch cluster, see https://mobz.github.io/elasticsearch-head/.
- Double-click the tMatchIndex component to open its Basic settings view and define its properties.
- In the Elasticsearch configuration area, enter the location of the cluster hosting the Elasticsearch system to be used in the Nodes field, for example: "localhost:9200"
- Enter the index to be created in Elasticsearch in the Index field, for example: education-agencies-chicago
- If you need to clean the Elasticsearch index specified in the Index field, select the Reset index check box.
- In the Pairing model folder field, enter the path to the local folder from which you want to retrieve the pairing model files.
- Press F6 to save and execute the Job.
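The Nodes and Index values can be sanity-checked before the Job runs. This Python sketch is hypothetical and not part of tMatchIndex: it splits a Nodes value into host and port pairs (accepting a comma-separated list, and defaulting to port 9200, are assumptions, as this page only shows a single node) and checks an index name against the Elasticsearch naming rules: lowercase only, none of the characters \ / * ? " < > | , # or a space, no leading -, _ or +, not . or .., and at most 255 bytes.

```python
INVALID_INDEX_CHARS = set('\\/*?"<>| ,#')


def parse_nodes(nodes: str, default_port: int = 9200) -> list:
    """Split a value such as "localhost:9200" into (host, port) pairs."""
    pairs = []
    # The Studio field holds a quoted string, so surrounding quotes are dropped.
    for node in nodes.strip().strip('"').split(","):
        node = node.strip()
        if not node:
            continue
        host, sep, port = node.rpartition(":")
        if not sep:  # no port given, fall back to the default
            host, port = node, str(default_port)
        pairs.append((host, int(port)))
    return pairs


def index_name_problems(name: str) -> list:
    """List the Elasticsearch naming rules that an index name breaks."""
    problems = []
    if not name:
        problems.append("must not be empty")
    if name != name.lower():
        problems.append("must be lowercase")
    if any(ch in INVALID_INDEX_CHARS for ch in name):
        problems.append("contains a forbidden character")
    if name[:1] in ("-", "_", "+"):
        problems.append("must not start with -, _ or +")
    if name in (".", ".."):
        problems.append("must not be . or ..")
    if len(name.encode("utf-8")) > 255:
        problems.append("must not exceed 255 bytes")
    return problems
```

With the values used in this scenario, parse_nodes('"localhost:9200"') returns [("localhost", 9200)] and index_name_problems("education-agencies-chicago") returns an empty list.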

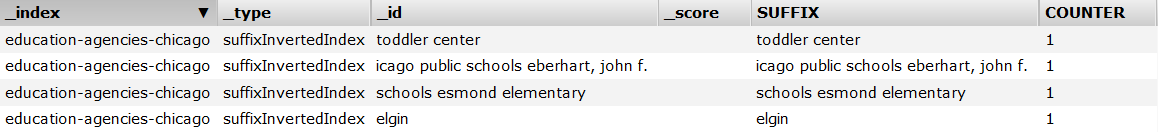

tMatchIndex created the education-agencies-chicago index in Elasticsearch, populated it with the clean data and computed the best suffixes based on the blocking key values.

You can browse the index created by tMatchIndex using the Elasticsearch-head plugin.
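Besides Elasticsearch-head, you can check how many documents the new index holds through the Elasticsearch _count REST endpoint. The Python sketch below uses only the standard library; the node address and index name are the example values from this scenario, the helper names are hypothetical, and authentication and TLS are not handled. URL building and response parsing are kept separate from the HTTP call so that they can be tried without a running cluster.

```python
import json
import urllib.request


def count_url(node: str, index: str) -> str:
    """Build the _count URL for an index on a node such as "localhost:9200"."""
    return f"http://{node}/{index}/_count"


def parse_count(body: str) -> int:
    """Extract the number of documents from a _count response body."""
    return int(json.loads(body)["count"])


def fetch_count(node: str, index: str, timeout: float = 10.0) -> int:
    """Query a running cluster over plain HTTP and return the document count."""
    with urllib.request.urlopen(count_url(node, index), timeout=timeout) as response:
        return parse_count(response.read().decode("utf-8"))
```

With the cluster from this scenario running, fetch_count("localhost:9200", "education-agencies-chicago") should return the number of records indexed by the Job.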

You can now use the indexed data as a reference data set for the tMatchIndexPredict component.

You can find an example of how to do continuous matching using tMatchIndexPredict on Talend Help Center (https://help.talend.com).