tRuleSurvivorship

Creates the single representation of an entity according to business rules and can create a master copy of data for Master Data Management.

tRuleSurvivorship receives records where duplicates, or possible duplicates, are already estimated and grouped together. Based on user-defined business rules, it creates one single representation for each duplicates group using the best-of-breed data. This representation is called a “survivor”.

In local mode, Apache Spark 2.0.0, 2.1.0, 2.3.0 and 2.4.0 are supported.

tRuleSurvivorship Standard properties

These properties are used to configure tRuleSurvivorship running in the Standard Job framework.

The Standard tRuleSurvivorship component belongs to the Data Quality family.

The component in this framework is available in Talend Data Management Platform, Talend Big Data Platform, Talend Real Time Big Data Platform, Talend Data Services Platform, Talend MDM Platform and in Talend Data Fabric.

Basic settings

|

Schema and Edit |

A schema is a row description. It defines the number of fields This component provides two read-only columns:

When a survivor record is created, the |

|

|

Built-In: You create and store the schema locally for this component |

|

|

Repository: You have already created the schema and stored it in the |

|

Group identifier |

Select the column whose content indicates the required group identifiers from the input |

|

Group size |

Select the column whose content indicates the required group size |

|

Rule package name |

Type in the name of the rule package you want to create with this component. |

|

Generate rules and survivorship flow |

Once you have defined all of the rules of a rule package or modified some of them with icon to generate this rule package into the Survivorship Rules node of Rules Management Note:

This step is necessary to validate these changes and take them into account at Warning: In a rule package, two rules cannot use the same

name. |

|

Rule table |

Complete this table to create a complete survivor validation flow. Basically, each given

Order: From the list, select the execution order of the

rules you are creating so as to define a survivor validation flow. The types of order may be:

Rule Name: Type in the name of each rule you are

Reference column: Select the column you need to apply a

Function: Select the type of validation operation to be

performed on a given Reference column. The available types include:

Value: enter the expression of interest corresponding to

Target column: when a step is executed, it validates a

Ignore blanks: Select the check boxes which correspond to |

|

Define conflict rule |

Select this check box to be able to create rules to resolve |

|

Conflict rule table |

Complete this table to create rules to resolve conflicts. The

Rule name: Type in the name of each rule you are

Conflicting column:When a step is

Function: Select the type of

validation operation to be performed on a given Conflicting column. The available types include those in the Rule table and the following ones:

Value: enter the expression of interest corresponding to

Reference column: Select the column you need to

Ignore blanks: Select the check boxes which correspond to

Disable: Select the check box to disable |

Advanced settings

|

tStatCatcher Statistics |

Select this check box to gather the Job processing metadata at the Job level |

Global Variables

|

Global Variables |

ERROR_MESSAGE: the error message generated by the A Flow variable functions during the execution of a component while an After variable To fill up a field or expression with a variable, press Ctrl + For further information about variables, see |

Usage

|

Usage rule |

This component is usually used as an intermediate component, and it requires an As it needs grouped data to process, this component works It also requires that the input data are sorted by the group When you export a Job using tRuleSurvivorship, you need to select the Export dependencies check box in order to export |

Selecting the best-of-breed data from a group of duplicates to create a survivor

This scenario applies only to Talend Data Management Platform, Talend Big Data Platform, Talend Real Time Big Data Platform, Talend Data Services Platform, Talend MDM Platform and Talend Data Fabric.

The Job in this scenario groups the duplicate data and creates one single representation of these duplicates. This representation is the “survivor” at the end of the selection process and you can use this survivor, for example, to create a master copy of data for MDM.

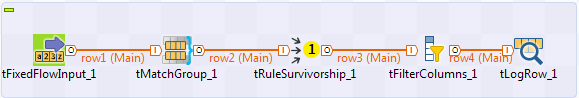

The components used in this Job are:

- tFixedFlowInput: provides the input data to be processed by this Job. In a real-world use case, you may replace tFixedFlowInput with another input component that provides the required data.
- tMatchGroup: groups the duplicates in the input data and gives each group a group ID and a group size. The technical names of these two columns are GID and GRP_SIZE respectively, and they are required by tRuleSurvivorship.
- tRuleSurvivorship: creates the user-defined survivor validation flow to select the best-of-breed data that composes the single representation of each duplicates group.
- tFilterColumns: rules out the technical columns and outputs the columns that carry the actual information of interest.
- tLogRow: presents the result of the Job execution.

Dropping and linking the components

- Drop tFixedFlowInput, tMatchGroup, tRuleSurvivorship, tFilterColumns and tLogRow from the Palette onto the Design workspace.
- Right-click tFixedFlowInput to open its contextual menu and select the Row > Main link from this menu to connect this component to tMatchGroup.
- Do the same to create the Main link from tMatchGroup to tRuleSurvivorship, then to tFilterColumns and to tLogRow.

Configuring the process of grouping the input data

Setting up the input records

- Double-click tFixedFlowInput to open its Component view.
- Click the three-dot button next to Edit schema to open the schema editor.
- Click the plus button nine times to add nine rows and rename them. In this example, they are: acctName, addr, city, state, zip, country, phone, date, credibility. These are the nine columns of the schema of the input data.
- In the Type column, select the data types for the rows of interest. In this example, select Date for the date column and Double for the credibility column.

  Note: Set the proper data types so that you can easily define the validation rules later.
- In the Date Pattern column, type in the date pattern that reflects the date format of interest. In this scenario, this format is yyyyMMdd.
- Click OK to validate these changes and accept the propagation prompted by the pop-up dialog box.
- In the Mode area of the Basic settings view, select Use Inline Content (delimited file) to enter the input data of interest.
- In the Content field, enter the input data to be processed. This data should correspond to the schema you have defined. In this example, the data is:

      GRIZZARD CO.;110 N MARYLAND AVE;GLENDALE;CA;912066;FR;8185431314;20110101;5
      GRIZZARD;110 NORTH MARYLAND AVENUE;GLENDALE;CA;912066;US;9003254892;20110118;4
      GRIZZARD INC;110 N. MARYLAND AVENUE;GLENDALE;CA;91206;US;(818) 543-1315;20110103;2
      GRIZZARD CO;1480 S COLORADO BOULEVARD;LOS ANGELES;CA;91206;US;(800) 325-4892;20110115;1
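As an illustration of how this inline content maps onto the nine-column schema, the following minimal Python sketch parses the four records outside the Studio. It assumes a semicolon field separator and one record per line, and it converts the date and credibility fields the way the Date (yyyyMMdd) and Double types suggest. The function name `parse_records` is hypothetical and is not part of any Talend API.

```python
from datetime import datetime

# Column names taken from the schema defined in this scenario.
COLUMNS = ["acctName", "addr", "city", "state", "zip",
           "country", "phone", "date", "credibility"]

CONTENT = """\
GRIZZARD CO.;110 N MARYLAND AVE;GLENDALE;CA;912066;FR;8185431314;20110101;5
GRIZZARD;110 NORTH MARYLAND AVENUE;GLENDALE;CA;912066;US;9003254892;20110118;4
GRIZZARD INC;110 N. MARYLAND AVENUE;GLENDALE;CA;91206;US;(818) 543-1315;20110103;2
GRIZZARD CO;1480 S COLORADO BOULEVARD;LOS ANGELES;CA;91206;US;(800) 325-4892;20110115;1
"""


def parse_records(content, field_sep=";"):
    """Split delimited content into dicts keyed by the schema columns."""
    records = []
    for line in content.splitlines():
        if not line.strip():
            continue
        fields = line.split(field_sep)
        if len(fields) != len(COLUMNS):
            raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
        record = dict(zip(COLUMNS, fields))
        # yyyyMMdd in the Studio corresponds to %Y%m%d in Python.
        record["date"] = datetime.strptime(record["date"], "%Y%m%d").date()
        record["credibility"] = float(record["credibility"])
        records.append(record)
    return records


records = parse_records(CONTENT)
```

Typing the date and credibility columns at this stage is what later allows rules such as Most recent or "> 3" to compare values rather than raw strings.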

Grouping the duplicate records

- Right-click tMatchGroup to open its contextual menu and select Configuration Wizard.

  From the wizard, you can see what your groups look like and adjust the component settings in order to get the similar matches correctly.
- Click the plus button under the Key Definition table to add one row.
- In the Input Key Attribute column of this row, select acctName. This column becomes the reference used to match the duplicates of the input data.
- In the Matching Function column, select the Jaro-Winkler matching algorithm.
- In the Match threshold field, enter the threshold value from which two record fields are considered to match each other. In this example, type in 0.6.
- Click Chart to execute this matching rule and show the result in this wizard.

  If the input records are not put into one single group, replace 0.6 with a smaller value and click Chart again to check the result until all four records are in the same group.

  The Job in this scenario puts four similar records into one single duplicates group so that tRuleSurvivorship is able to create one survivor from them. This simple sample gives you a clear picture of how tRuleSurvivorship works along with other components to create the best data. However, in a real-world case, you may need to process much more data with complex duplicate situations and thus put the data into many more groups.
- Click OK to close the Configuration wizard. The Basic settings view of the tMatchGroup component is automatically filled with the parameters you have set.

  For further information about the Configuration wizard, see Configuration wizard.
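To give a feel for why a threshold of 0.6 groups the four account names, here is a small Python sketch of the textbook Jaro-Winkler similarity. It is an assumption that this matches what tMatchGroup computes: the component's own implementation may differ in details such as case handling or prefix weighting, and the names `jaro` and `jaro_winkler` are illustrative only.

```python
from itertools import combinations


def jaro(s1, s2):
    """Textbook Jaro similarity between two strings (0.0 to 1.0)."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    window = max(max(len1, len2) // 2 - 1, 0)
    matched1 = [False] * len1
    matched2 = [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        for j in range(max(0, i - window), min(len2, i + window + 1)):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Count matched characters that appear in a different order.
    k = 0
    out_of_order = 0
    for i in range(len1):
        if matched1[i]:
            while not matched2[k]:
                k += 1
            if s1[i] != s2[k]:
                out_of_order += 1
            k += 1
    transpositions = out_of_order // 2
    return (matches / len1 + matches / len2
            + (matches - transpositions) / matches) / 3


def jaro_winkler(s1, s2, prefix_scale=0.1):
    """Jaro similarity boosted for a common prefix of up to four characters."""
    score = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1[:4], s2[:4]):
        if a != b:
            break
        prefix += 1
    return score + prefix * prefix_scale * (1 - score)


names = ["GRIZZARD CO.", "GRIZZARD", "GRIZZARD INC", "GRIZZARD CO"]
scores = {(a, b): jaro_winkler(a, b) for a, b in combinations(names, 2)}
same_group = all(score >= 0.6 for score in scores.values())
```

Under these assumptions every pair of account names scores well above 0.6, which is consistent with the four records landing in a single group.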

Defining the survivor validation flow

Having configured and grouped the input data, you need to create the survivor validation flow using tRuleSurvivorship. To do this, proceed as follows:

- Double-click tRuleSurvivorship to open its Component view.
- Select GID for the Group identifier field and GRP_SIZE for the Group size field.
- In the Rule package name field, enter the name of the rule package you need to create to define the survivor validation flow of interest. In this example, this name is org.talend.survivorship.sample.
- In the Rule table, click the plus button to add as many rows as required and complete them using the corresponding rule definitions. In this example, add ten rows and complete them using the table below:

  | Order | Rule name | Reference column | Function | Value | Target column |
  |---|---|---|---|---|---|
  | Sequential | "1_LengthAcct" | acctName | Expression | ".length >11" | acctName |
  | Sequential | "2_LongestAddr" | addr | Longest | n/a | addr |
  | Sequential | "3_HighCredibility" | credibility | Expression | "> 3" | credibility |
  | Sequential | "4_MostCommonCity" | city | Most common | n/a | city |
  | Sequential | "5_MostCommonZip" | zip | Most common | n/a | zip |
  | Multi-condition | n/a | zip | Match regex | "\d{5}" | n/a |
  | Multi-target | n/a | n/a | n/a | n/a | state |
  | Multi-target | n/a | n/a | n/a | n/a | country |
  | Sequential | "6_LatestPhone" | date | Most recent | n/a | phone |
  | Multi-target | n/a | n/a | n/a | n/a | date |

  Do not use special characters in rule names, otherwise the Job may not run correctly.

  These rules are executed in top-down order. The Multi-condition rule is one of the conditions of the 5_MostCommonZip rule, so the rule-compliant zip code should be the most common zip code and also have five digits. The zip column is the target column of the 5_MostCommonZip rule, and the two Multi-target rules below it add two more target columns, state and country, so the zip, state and country columns will be the source of the best-of-breed data. Thus, once a zip code is validated, the corresponding record field values from these three columns will be selected.

  The same is true of the Sequential rule 6_LatestPhone. Once a date value is validated, the corresponding record field values will be selected from the phone and the date columns.

  Note: In this table, the fields reading n/a are not available for the Order types or Function types you have selected. In the Rule table of the Basic settings view of tRuleSurvivorship, these unavailable fields are greyed out.

  For further information about this rule table, see the properties table at the beginning of this tRuleSurvivorship section.
- Next to Generate rules and survivorship flow, click the icon to generate the rule package with the contents you have defined.

  Once done, you can find the generated rule package in the Metadata > Rules Management > Survivorship Rules directory of your Studio Repository. From there, you can open the newly created survivor validation flow of this example and read its diagram. For further information, see Talend Studio User Guide.
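The effect of a few of these rules on the sample group can be approximated with the Python sketch below. It is only an illustration under stated assumptions: the helper names (`most_common`, `match_regex`, `most_recent`) are hypothetical, the regular expression is assumed to have to match the whole value, and ties are resolved here by taking the first compliant record, which is not necessarily how the generated rules behave.

```python
import re
from collections import Counter

# Only the columns needed by the rules illustrated here.
records = [
    {"city": "GLENDALE", "zip": "912066", "phone": "8185431314", "date": "20110101"},
    {"city": "GLENDALE", "zip": "912066", "phone": "9003254892", "date": "20110118"},
    {"city": "GLENDALE", "zip": "91206", "phone": "(818) 543-1315", "date": "20110103"},
    {"city": "LOS ANGELES", "zip": "91206", "phone": "(800) 325-4892", "date": "20110115"},
]


def most_common(rows, column):
    """Keep the rows whose value in `column` is among the most frequent."""
    counts = Counter(row[column] for row in rows)
    top = max(counts.values())
    return [row for row in rows if counts[row[column]] == top]


def match_regex(rows, column, pattern):
    """Keep the rows whose whole value in `column` matches `pattern` (assumption)."""
    return [row for row in rows if re.fullmatch(pattern, row[column])]


def most_recent(rows, column):
    """Keep the rows with the latest date; yyyyMMdd strings sort chronologically."""
    latest = max(row[column] for row in rows)
    return [row for row in rows if row[column] == latest]


# 4_MostCommonCity: reference column city, target column city.
survivor_city = most_common(records, "city")[0]["city"]

# 5_MostCommonZip plus its Multi-condition: most common AND five digits.
zip_rows = match_regex(most_common(records, "zip"), "zip", r"\d{5}")
survivor_zip = zip_rows[0]["zip"]

# 6_LatestPhone: reference column date, target column phone.
survivor_phone = most_recent(records, "date")[0]["phone"]
```

In this sample the two zip values are equally frequent, so it is the five-digit condition that decides between them in the sketch.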

Selecting the columns of interest

The schema of tRuleSurvivorship includes several technical columns such as GID and GRP_SIZE, which are not of interest in this example, so you may need to use tFilterColumns to rule these technical columns out and output only the columns carrying actual data. To do this, proceed as follows:

- Double-click tFilterColumns to open its Component view.
- Click Sync columns to retrieve the schema from its preceding component. If a dialog box pops up to prompt the propagation, click Yes to accept it.
- Click the three-dot button next to Edit schema to open the schema editor.
- On the tFilterColumns side of this editor, select the GID, GRP_SIZE, MASTER and SCORE columns and click the red cross icon below to remove them.
- Click OK to validate these changes and accept the propagation prompted by the pop-up dialog box.
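Conceptually, this filtering step amounts to a projection that drops the four technical columns from each row. The Python sketch below shows the idea; the row values are made up for illustration and the function name is hypothetical.

```python
# Technical columns removed in the schema editor in this scenario.
TECHNICAL_COLUMNS = {"GID", "GRP_SIZE", "MASTER", "SCORE"}


def drop_technical_columns(row):
    """Return a copy of the row without the technical columns."""
    return {name: value for name, value in row.items()
            if name not in TECHNICAL_COLUMNS}


# Hypothetical row: the GID, MASTER and SCORE values are placeholders.
row = {"acctName": "GRIZZARD CO.", "city": "GLENDALE",
       "GID": "group-1", "GRP_SIZE": 4, "MASTER": True, "SCORE": 1.0}
filtered = drop_technical_columns(row)
```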

Executing the Job

The tLogRow component is used to present the execution result of the Job. You can configure the presentation mode on its Component view.

To do this, double-click tLogRow to open the Component view and in the Mode area, select the Table (print values in cells of a table) check box.

To execute this Job, press F6.

Once done, the Run view is opened automatically, where you can check the execution result.

You can read that the last row is the survivor record because its SURVIVOR column indicates true. This record is composed of the best-of-breed data of each column from the four other rows, which are the duplicates of the same group.

The CONFLICT column presents the columns carrying more than one record field value compliant with the given validation rules. Take the credibility column for example: apart from the survivor record whose credibility is 5.0, the CONFLICT column indicates that the credibility of the second record, GRIZZARD, is 4.0, which is also greater than 3, the threshold set in the rules you have defined. However, as the credibility 5.0 appears in the first record, GRIZZARD CO., tRuleSurvivorship selects it as the best-of-breed data.
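The notion of a conflict described above, a column with more than one value that satisfies its rule, can be sketched in Python as follows. This is an illustration of the idea only; it does not reproduce how the component formats the CONFLICT column, and the function name is hypothetical.

```python
def conflicting_columns(rows, rules):
    """Return the columns that have more than one distinct rule-compliant value.

    `rules` maps a column name to a predicate applied to that column's values.
    """
    conflicts = []
    for column, is_compliant in rules.items():
        compliant_values = {row[column] for row in rows if is_compliant(row[column])}
        if len(compliant_values) > 1:
            conflicts.append(column)
    return conflicts


rows = [
    {"acctName": "GRIZZARD CO.", "credibility": 5.0},
    {"acctName": "GRIZZARD", "credibility": 4.0},
    {"acctName": "GRIZZARD INC", "credibility": 2.0},
    {"acctName": "GRIZZARD CO", "credibility": 1.0},
]

# 3_HighCredibility from the Rule table: credibility "> 3".
rules = {"credibility": lambda value: value > 3}
conflicts = conflicting_columns(rows, rules)
```

Both 5.0 and 4.0 pass the "> 3" rule, so credibility is reported as a conflicting column in this sketch.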

Modifying the rule file manually to code the conditions you want to use to create a survivor

This scenario applies only to Talend Data Management Platform, Talend Big Data Platform, Talend Real Time Big Data Platform, Talend Data Services Platform, Talend MDM Platform and Talend Data Fabric.

In a Job, the tRuleSurvivorship component generates a survivorship rule package based on the conditions you define in the Rule table in the component Basic settings view.

If you want the rule to survive records based on some more advanced criteria, you must manually code the conditions in the rule using the Drools language.

The Job in this scenario gives an example of how to modify the code in the rule generated by the component to use specific conditions to create a survivor. Later, you can use this survivor, for example, to create a master copy of data for MDM.

The components used in this Job are:

- tFixedFlowInput: provides the input data to be processed by this Job.
- tRuleSurvivorship: creates the survivor validation flow based on the conditions you code in the rule. This component selects the best-of-breed data that composes the single representation of each duplicate group.
- tLogRow: shows the result of the Job execution.

Dropping the components and linking them together

- Drop tFixedFlowInput, tRuleSurvivorship and tLogRow from the Palette of the Studio onto the Design workspace.
- Right-click tFixedFlowInput and select the Row > Main link to connect this component to tRuleSurvivorship.
- Do the same to connect tRuleSurvivorship to tLogRow using the Row > Main link.

Setting up the input records

- Double-click tFixedFlowInput to open its Component view.
- Click the three-dot button next to Edit schema to open the schema editor.
- Click the plus button and add five rows. Rename these rows as follows: Record_ID, File, Acctname, GRP_ID and GRP_SIZE.

  The input data has information about group ID and group size. In a real-life scenario, such information can be gathered by the tMatchGroup component as shown in scenario 1. tMatchGroup groups duplicates in the input data and gives each group a group ID and a group size. These two columns are required by tRuleSurvivorship.
- In the Type column, select the data types for your columns. In this example, set the type to Integer for Record_ID and GRP_SIZE, and set it to String for the other columns.

  Note: Make sure to set the proper data types so that you can define the validation rules without error messages.
- Click OK to validate these changes and accept the propagation when prompted by the pop-up dialog box.
- In the Mode area of the Basic settings view, select Use Inline Content (delimited file).
- In the Content field, enter the input data to be processed.

  This data should correspond to the schema you have defined. In this example, the input data is as follows:

      1;2;AcmeFromFile2;1;2
      2;1;AcmeFromFile1;1;0
      3;1;AAA;2;1
      4;2;BBB;3;1
      5;1; ;4;2
      6;2;NotNull;4;0
- Set the row and field separators in the corresponding fields.
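Because tRuleSurvivorship relies on the group ID and group size columns, it can help to sanity-check hand-written input before running the Job. The Python sketch below parses the six sample records and verifies that, in each group, exactly one record carries a non-zero GRP_SIZE equal to the number of records in the group. That convention is inferred from the sample data above rather than from a documented contract, and the function names are hypothetical.

```python
from collections import defaultdict

CONTENT = """\
1;2;AcmeFromFile2;1;2
2;1;AcmeFromFile1;1;0
3;1;AAA;2;1
4;2;BBB;3;1
5;1; ;4;2
6;2;NotNull;4;0
"""


def parse_rows(content, field_sep=";"):
    """Parse the inline content into dicts that follow the five-column schema."""
    rows = []
    for line in content.splitlines():
        record_id, file_id, acctname, grp_id, grp_size = line.split(field_sep)
        rows.append({"Record_ID": int(record_id), "File": file_id,
                     "Acctname": acctname, "GRP_ID": grp_id,
                     "GRP_SIZE": int(grp_size)})
    return rows


def check_groups(rows):
    """Return {GRP_ID: member count}; raise if a declared size is inconsistent."""
    members = defaultdict(list)
    for row in rows:
        members[row["GRP_ID"]].append(row)
    sizes = {}
    for grp_id, group in members.items():
        declared = [row["GRP_SIZE"] for row in group if row["GRP_SIZE"] != 0]
        if declared != [len(group)]:
            raise ValueError(f"group {grp_id}: declared {declared}, found {len(group)}")
        sizes[grp_id] = len(group)
    return sizes


rows = parse_rows(CONTENT)
group_sizes = check_groups(rows)
```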

Defining the survivor validation flow

- Double-click tRuleSurvivorship to open its Component view.
- Select GRP_ID from the Group identifier list and GRP_SIZE from the Group size list.
- In the Rule package name field, replace the default name org.talend.survivorship.sample with a name of your choice, if needed.

  The survivor validation flow will be generated and saved under this name in the Repository tree view of the Integration perspective.
- In the Rule table, click the plus button to add a row per rule.

  In this example, define one rule and complete it as follows:

  | Order | Rule name | Reference column | Function | Value | Target column |
  |---|---|---|---|---|---|
  | Sequential | “Rule1” | File | Expression | .equals("1") | Acctname |

  One rule, “Rule1”, will be generated and executed by tRuleSurvivorship. This rule validates the records in the File column that comply with the expression you enter in the Value column of the Rule table. The component will then select the corresponding value as the best-of-breed data from the Acctname target column.
- Next to Generate rules and survivorship flow, click the icon to generate the rule package according to the conditions you have defined.

  The rule package is generated and saved under Metadata > Rules Management > Survivorship Rules in the Repository tree view of the Integration perspective.
- In the Repository tree view, browse to the rule file under the Survivorship Rules folder and double-click “Rule1” to open it.

  This rule selects the values that come from file 1. However, you may also want to survive records based on specific criteria; for example, if Acctname has a value in file 1, you may want to use that value, or else use the value from file 2 instead. To do this, you must modify the code manually in the rule file.
- Modify the rule with the following Drools code:

      package org.talend.survivorship.sample

      rule "ExistInFile1"
          no-loop true
          dialect "mvel"
          ruleflow-group "Rule1Group"
      when
          $input : RecordIn( file.equals("1"), acctname!= null, !acctname.trim().equals("") )
      then
          System.out.println("ExistInFile1 fired " + $input.record_id);
          dataset.survive( $input.TALEND_INTERNAL_ID, "Acctname" );
          dataset.survive( $input.TALEND_INTERNAL_ID, "File" );
      end

      rule "NotExistFile1"
          no-loop true
          dialect "mvel"
          ruleflow-group "Rule1Group"
      when
          $input : RecordIn( file.equals("2"), acctname!= null && !acctname.trim().equals("") )
          (not (exists (RecordIn( file.equals("1") )))
          or exists( RecordIn( file.equals("1"), acctname== null || acctname.trim().equals("") ) ))
      then
          System.out.println("NotExistFile1 fired " + $input.record_id);
          dataset.survive( $input.TALEND_INTERNAL_ID, "Acctname" );
          dataset.survive( $input.TALEND_INTERNAL_ID, "File" );
      end

  Warning: After you modify the rule file, you must not click the icon next to Generate rules and survivorship flow again. Otherwise, your modifications will be replaced by the newly generated rule package.
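To make the intent of the two Drools rules easier to follow, the Python sketch below restates the same decision for the sample data: within each group, take the Acctname from file 1 when it is not blank, otherwise fall back to the non-blank value from file 2. It assumes the rules are evaluated one group at a time and simplifies the case where a group holds both blank and non-blank file 1 records; the function names are hypothetical and this is not the code the component runs.

```python
from collections import defaultdict

rows = [
    {"Record_ID": 1, "File": "2", "Acctname": "AcmeFromFile2", "GRP_ID": "1"},
    {"Record_ID": 2, "File": "1", "Acctname": "AcmeFromFile1", "GRP_ID": "1"},
    {"Record_ID": 3, "File": "1", "Acctname": "AAA", "GRP_ID": "2"},
    {"Record_ID": 4, "File": "2", "Acctname": "BBB", "GRP_ID": "3"},
    {"Record_ID": 5, "File": "1", "Acctname": " ", "GRP_ID": "4"},
    {"Record_ID": 6, "File": "2", "Acctname": "NotNull", "GRP_ID": "4"},
]


def is_blank(value):
    """Mirror the null-or-empty-after-trim test used in the rules."""
    return value is None or value.strip() == ""


def surviving_acctnames(rows):
    """Return {GRP_ID: Acctname} following the file 1 then file 2 preference."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["GRP_ID"]].append(row)
    survivors = {}
    for grp_id, members in groups.items():
        # Counterpart of "ExistInFile1": a non-blank value coming from file 1.
        chosen = [r for r in members
                  if r["File"] == "1" and not is_blank(r["Acctname"])]
        if not chosen:
            # Counterpart of "NotExistFile1": file 1 is missing or blank,
            # so a non-blank value from file 2 is used instead.
            chosen = [r for r in members
                      if r["File"] == "2" and not is_blank(r["Acctname"])]
        if chosen:
            survivors[grp_id] = chosen[0]["Acctname"]
    return survivors


survivors = surviving_acctnames(rows)
```

Group 4 is the interesting case: its file 1 record holds only a space, so the value from file 2 is kept.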

Executing the Job

- Double-click tLogRow to open the Component view and in the Mode area, select the Table (print values in cells of a table) option.

  The execution result of the Job will be printed in a table.
- Press F6 to execute the Job.

  The Run view is opened automatically, showing the execution results.

  You can read that four rows are the survivor records because their SURVIVOR column indicates true. In the survivor records, the Acctname value is selected from file 1, if the value exists. If not, the value is selected from file 2, as you defined in the rule. The other rows are the duplicates of the same groups.

  The CONFLICT column shows that no column has more than one value compliant with the given validation rules.

tRuleSurvivorship properties for Apache Spark Batch

These properties are used to configure tRuleSurvivorship running in the Spark Batch Job framework.

The Spark Batch tRuleSurvivorship component belongs to the Data Quality family.

The component in this framework is available in all Talend Platform products with Big Data and in Talend Data Fabric.

Basic settings

|

Schema and Edit |

A schema is a row description. It defines the number of fields This component provides two read-only columns:

When a survivor record is created, the |

|

|

Built-In: You create and store the schema locally for this component |

|

|

Repository: You have already created the schema and stored it in the |

|

Group identifier |

Select the column whose content indicates the required group identifiers from the input |

|

Rule package name |

Type in the name of the rule package you want to create with this component. |

|

Generate rules and survivorship flow |

Once you have defined all of the rules of a rule package or modified some of them with icon to generate this rule package into the Survivorship Rules node of Rules Management Note:

This step is necessary to validate these changes and take them into account at Warning: In a rule package, two rules cannot use the same

name. |

|

Rule table |

Complete this table to create a complete survivor validation flow. Basically, each given

Order: From the list, select the execution order of the

rules you are creating so as to define a survivor validation flow. The types of order may be:

Rule Name: Type in the name of each rule you are

Reference column: Select the column you need to apply a

Function: Select the type of validation operation to be

performed on a given Reference column. The available types include:

Value: enter the expression of interest corresponding to

Target column: when a step is executed, it validates a

Ignore blanks: Select the check boxes which correspond to |

|

Define conflict rule |

Select this check box to be able to create rules to resolve |

|

Conflict rule table |

Complete this table to create rules to resolve conflicts. The

Rule name: Type in the name of each rule you are

Conflicting column:When a step is

Function: Select the type of

validation operation to be performed on a given Conflicting column. The available types include those in the Rule table and the following ones:

Value: enter the expression of interest corresponding to

Reference column: Select the column you need to

Ignore blanks: Select the check boxes which correspond to

Disable: Select the check box to disable |

Advanced settings

|

Set the number of partitions by |

Enter the number of partitions you want to split each group into. |

Global Variables

|

Global Variables |

ERROR_MESSAGE: the error message generated by the A Flow variable functions during the execution of a component while an After variable To fill up a field or expression with a variable, press Ctrl + For further information about variables, see |

Usage

|

Usage rule |

This component is used as an intermediate step. This component, along with the Spark Batch component Palette it belongs to, Note that in this documentation, unless otherwise explicitly stated, a |

|

Spark Connection |

In the Spark

Configuration tab in the Run view, define the connection to a given Spark cluster for the whole Job. In addition, since the Job expects its dependent jar files for execution, you must specify the directory in the file system to which these jar files are transferred so that Spark can access these files:

This connection is effective on a per-Job basis. |

Creating a clean data set from the suspect pairs labeled by tMatchPredict and the unique rows computed by tMatchPairing

This scenario applies only to subscription-based Talend Platform products with Big Data and Talend Data Fabric.

The input data of this scenario consists of:

- The suspect records labeled as duplicates and grouped by tMatchPredict. You can find an example of how to label suspect pairs with assigned labels on Talend Help Center (https://help.talend.com).
- The unique rows computed by tMatchPairing. You can find examples of how to compute unique rows from source data on Talend Help Center (https://help.talend.com).

The Job contains two subJobs:

- In the first subJob, tRuleSurvivorship processes the records labeled as duplicates and grouped by tMatchPredict, to create one single representation of each duplicates group.
- In the second subJob, tUnite merges the survivors and the unique rows to create a clean and deduplicated data set to be used with the tMatchIndex component.

The output file contains clean and deduplicated data. You can index this reference data set in ElasticSearch using the tMatchIndex component.
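The overall data flow of the two subJobs can be pictured with the short Python sketch below. It rests on assumptions that the text does not spell out: that survivors are the rows whose SURVIVOR column is true, that both inputs are projected onto the same business columns before being merged, and that the sample rows and column names shown here are invented for illustration.

```python
def build_clean_data_set(grouped_rows, unique_rows, business_columns):
    """Keep survivor rows, align both inputs on the same columns, then merge."""
    def project(row):
        return {name: row[name] for name in business_columns}

    survivors = [row for row in grouped_rows if row.get("SURVIVOR")]
    return [project(row) for row in survivors] + [project(row) for row in unique_rows]


# Hypothetical rows standing in for the two input files.
grouped_rows = [
    {"name": "ACME", "city": "PARIS", "SURVIVOR": False},
    {"name": "ACME Inc", "city": "PARIS", "SURVIVOR": False},
    {"name": "ACME Inc", "city": "PARIS", "SURVIVOR": True},
]
unique_rows = [{"name": "Globex", "city": "LYON", "extra": "x"}]

clean = build_clean_data_set(grouped_rows, unique_rows, ["name", "city"])
```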

Setting up the Job

- Set up the first subJob:
  - Drop the following components from the Palette onto the design workspace: tFileInputDelimited, two tFilterRow components, tRuleSurvivorship and tFileOutputDelimited. Use the Main link to connect the components.
  - Connect tFileInputDelimited to the first tFilterRow component.
  - Connect the first tFilterRow component to tRuleSurvivorship.
  - Connect tRuleSurvivorship to the second tFilterRow component.
  - Connect the second tFilterRow component to tFileOutputDelimited.
- Set up the second subJob:
  - Drop the following components from the Palette onto the design workspace: two tFileInputDelimited components, tFilterColumns, tUnite and tFileOutputDelimited. Use the Main link to connect the components.
  - Connect the first tFileInputDelimited component to tFilterColumns.
  - Connect tFilterColumns to tUnite.
  - Connect the second tFileInputDelimited component to tUnite.
  - Connect tUnite to tFileOutputDelimited.
- Connect the tFileInputDelimited from the first subJob to the tFileInputDelimited from the second subJob using a Trigger > OnSubjobOk link.

Selecting the Spark mode

Depending on the Spark cluster to be used, select a Spark mode for your Job.

The Spark documentation provides an exhaustive list of Spark properties and their default values at Spark Configuration. A Spark Job designed in the Studio uses this default configuration except for the properties you explicitly defined in the Spark Configuration tab or the components used in your Job.

- Click Run to open its view and then click the Spark Configuration tab to display its view for configuring the Spark connection.
- Select the Use local mode check box to test your Job locally.

  In the local mode, the Studio builds the Spark environment in itself on the fly in order to run the Job in it. Each processor of the local machine is used as a Spark worker to perform the computations.

  In this mode, your local file system is used; therefore, deactivate the configuration components such as tS3Configuration or tHDFSConfiguration that provide connection information to a remote file system, if you have placed these components in your Job.

  You can launch your Job without any further configuration.
- Clear the Use local mode check box to display the list of the available Hadoop distributions and from this list, select the distribution corresponding to your Spark cluster to be used.

  This distribution could be:

  - For this distribution, Talend supports:
    - Yarn client
    - Yarn cluster
  - For this distribution, Talend supports:
    - Standalone
    - Yarn client
    - Yarn cluster
  - For this distribution, Talend supports:
    - Yarn client
  - For this distribution, Talend supports:
    - Yarn client
    - Yarn cluster
  - For this distribution, Talend supports:
    - Standalone
    - Yarn client
    - Yarn cluster
  - For this distribution, Talend supports:
    - Yarn cluster
  - Cloudera Altus

    For this distribution, Talend supports:
    - Yarn cluster

    Your Altus cluster should run on the following Cloud providers:
    - Azure

      The support for Altus on Azure is a technical preview feature.
    - AWS

  As a Job relies on Avro to move data among its components, it is recommended to set your cluster to use Kryo to handle the Avro types. This not only helps avoid a known Avro issue but also brings inherent performance gains. The Spark property to be set in your cluster is:

      spark.serializer org.apache.spark.serializer.KryoSerializer

  If you cannot find the distribution corresponding to yours in this drop-down list, the distribution you want to connect to is not officially supported by Talend. In this situation, you can select Custom, then select the Spark version of the cluster to be connected and click the [+] button to display the dialog box in which you can alternatively:

  - Select Import from existing version to import an officially supported distribution as base and then add other required jar files which the base distribution does not provide.
  - Select Import from zip to import the configuration zip for the custom distribution to be used. This zip file should contain the libraries of the different Hadoop/Spark elements and the index file of these libraries.

    In Talend Exchange, members of the Talend community have shared some ready-for-use configuration zip files which you can download from this Hadoop configuration list and use directly in your connection. However, because of the ongoing evolution of the different Hadoop-related projects, you might not be able to find the configuration zip corresponding to your distribution in this list; in that case, it is recommended to use the Import from existing version option to take an existing distribution as base and add the jars required by your distribution.

    Note that custom versions are not officially supported by Talend. Talend and its community provide you with the opportunity to connect to custom versions from the Studio but cannot guarantee that the configuration of whichever version you choose will be easy. As such, you should only attempt to set up such a connection if you have sufficient Hadoop and Spark experience to handle any issues on your own.

    For a step-by-step example about how to connect to a custom distribution and share this connection, see Hortonworks.
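The Kryo serializer property mentioned in this section uses the "key value" layout of a Spark properties file. As a small illustration, the Python sketch below renders a dictionary of Spark properties into that layout; the helper name is hypothetical, and how you actually apply the property depends on how your cluster is administered.

```python
def render_spark_properties(properties):
    """Render Spark properties as 'key value' lines, one property per line."""
    return "\n".join(f"{key} {value}" for key, value in properties.items())


kryo_properties = {
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
}
rendered = render_spark_properties(kryo_properties)
```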

Configuring the connection to the file system to be used by Spark

Skip this section if you are using Google Dataproc or HDInsight, as for these two distributions, this connection is configured in the Spark configuration tab.

- Double-click tHDFSConfiguration to open its Component view.

  Spark uses this component to connect to the HDFS system to which the jar files dependent on the Job are transferred.
- If you have defined the HDFS connection metadata under the Hadoop cluster node in Repository, select Repository from the Property type drop-down list and then click the […] button to select the HDFS connection you have defined from the Repository content wizard.

  For further information about setting up a reusable HDFS connection, search for centralizing HDFS metadata on Talend Help Center (https://help.talend.com).

  If you complete this step, you can skip the following steps about configuring tHDFSConfiguration because all the required fields should have been filled automatically.
- In the Version area, select the Hadoop distribution you need to connect to and its version.
- In the NameNode URI field, enter the location of the machine hosting the NameNode service of the cluster. If you are using WebHDFS, the location should be webhdfs://masternode:portnumber; WebHDFS with SSL is not supported yet.
- In the Username field, enter the authentication information used to connect to the HDFS system to be used. Note that the user name must be the same as the one you have entered in the Spark configuration tab.

Creating survivors from the suspect pairs labeled by tMatchPredict

Configuring the input component

-

Double-click tFileInputDelimited to open its Basic settings view.

The input data must be the suspect pairs labeled and grouped by the tMatchPredict component.

-

Click the […] button next to Edit schema and use the [+] button in the dialog box to add columns.

The input schema must be the same as the suspect pairs output by the tMatchPredict component.

-

Click OK in the dialog box and accept to propagate the changes when prompted.

-

In the Folder/File field, set the path to the input file.

-

Set the row and field separators in the corresponding fields and the header and footer, if any.
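The input settings above (file path, row and field separators, header) correspond to ordinary delimited-file parsing. The following is a minimal Python sketch of that idea, not the code the Studio generates. The sample content, the ";" field separator, the single header row and the column names are all assumptions made for illustration.

```python
import csv
import io

# Hypothetical sample standing in for the file of suspect pairs;
# the column names and the ";" separator are assumptions.
SAMPLE = (
    "Original_Id;Site_name;LABEL\n"
    "1;Lincoln School;YES\n"
    "2;Lincoln Scool;YES\n"
    "3;Oak Academy;NO\n"
)

def read_delimited(text, field_sep=";"):
    """Parse delimited text with one header row into a list of dicts."""
    reader = csv.reader(io.StringIO(text), delimiter=field_sep)
    rows = list(reader)
    header, data = rows[0], rows[1:]   # first row holds the column names
    return [dict(zip(header, row)) for row in data]

records = read_delimited(SAMPLE)
```

Each parsed record is keyed by column name, which mirrors how the schema defined in the component gives every field a name for the downstream components.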

Selecting the Spark mode

Depending on the Spark cluster to be used, select a Spark mode for your Job.

The Spark documentation provides an exhaustive list of Spark properties and

their default values at Spark Configuration. A Spark Job designed in the Studio uses

this default configuration except for the properties you explicitly defined in the

Spark Configuration tab or the components

used in your Job.

-

Click Run to open its view and then click the Spark Configuration tab to display its view for configuring the Spark connection.

-

Select the Use local mode check box to test your Job locally.

In local mode, the Studio builds the Spark environment within itself on the fly in order to run the Job in it. Each processor of the local machine is used as a Spark worker to perform the computations.

In this mode, your local file system is used; therefore, deactivate the configuration components such as tS3Configuration or tHDFSConfiguration that provide connection information to a remote file system, if you have placed these components in your Job.

You can launch your Job without any further configuration.

-

Clear the Use local mode check box to display the list of the available Hadoop distributions and from this list, select the distribution corresponding to your Spark cluster to be used.

This distribution could be:

-

For this distribution, Talend supports:

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Standalone

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Yarn client

-

-

For this distribution, Talend supports:

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Standalone

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Yarn cluster

-

-

Cloudera Altus

For this distribution, Talend supports:

-

Yarn cluster

Your Altus cluster should run on the following Cloud providers:

-

Azure

The support for Altus on Azure is a technical preview feature.

-

AWS

As a Job relies on Avro to move data among its components, it is recommended to set your cluster to use Kryo to handle the Avro types. This not only helps avoid this known Avro issue but also brings inherent performance gains. The Spark property to be set in your cluster is:

spark.serializer org.apache.spark.serializer.KryoSerializer

If you cannot find the distribution corresponding to yours in this drop-down list, the distribution you want to connect to is not officially supported by Talend. In this situation, you can select Custom, then select the Spark version of the cluster to be connected and click the [+] button to display the dialog box in which you can alternatively:

-

Select Import from existing version to import an officially supported distribution as a base, and then add the other required jar files that the base distribution does not provide.

-

Select Import from zip to import the configuration zip for the custom distribution to be used. This zip file should contain the libraries of the different Hadoop/Spark elements and the index file of these libraries.

In Talend Exchange, members of the Talend community have shared some ready-to-use configuration zip files, which you can download from this Hadoop configuration list and use directly in your connection. However, because the different Hadoop-related projects keep evolving, you might not find the configuration zip corresponding to your distribution in this list. In that case, it is recommended to use the Import from existing version option to take an existing distribution as a base and add the jars required by your distribution.

Note that custom versions are not officially supported by Talend. Talend and its community provide you with the opportunity to connect to custom versions from the Studio but cannot guarantee that the configuration of whichever version you choose will be easy. As such, you should only attempt to set up such a connection if you have sufficient Hadoop and Spark experience to handle any issues on your own.

For a step-by-step example about how to connect to a custom

distribution and share this connection, see Hortonworks.
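The Kryo recommendation in this section comes down to a single property in the cluster's Spark configuration. As a rough illustration, the Python sketch below parses text in the whitespace-separated "key value" layout of a spark-defaults.conf file and checks whether the serializer is set to Kryo. The excerpt is hypothetical, and the helper name `parse_spark_defaults` is an assumption introduced here, not part of Spark or the Studio.

```python
KRYO = "org.apache.spark.serializer.KryoSerializer"

def parse_spark_defaults(text):
    """Parse 'key value' lines into a dict, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)      # split on the first run of whitespace
        if len(parts) == 2:
            props[parts[0]] = parts[1].strip()
    return props

# Hypothetical excerpt of a cluster configuration file.
conf_text = """
# serializer recommended for Jobs that move Avro records
spark.serializer org.apache.spark.serializer.KryoSerializer
spark.executor.memory 2g
"""

props = parse_spark_defaults(conf_text)
uses_kryo = props.get("spark.serializer") == KRYO
```

A check like this can be run against the cluster's configuration before launching a Job, so that a missing serializer setting is noticed early rather than at run time.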

Configuring the connection to the file system to be used by Spark

Skip this section if you are using Google Dataproc or HDInsight, as for these two

distributions, this connection is configured in the Spark

configuration tab.

-

Double-click tHDFSConfiguration to open its Component view.

Spark uses this component to connect to the HDFS system to which the jar files dependent on the Job are transferred.

-

If you have defined the HDFS connection metadata under the Hadoop cluster node in Repository, select Repository from the Property type drop-down list and then click the […] button to select the HDFS connection you have defined from the Repository content wizard.

For further information about setting up a reusable HDFS connection, search for centralizing HDFS metadata on Talend Help Center (https://help.talend.com).

If you complete this step, you can skip the following steps about configuring tHDFSConfiguration because all the required fields should have been filled automatically.

-

In the Version area, select the Hadoop distribution you need to connect to and its version.

-

In the NameNode URI field, enter the location of the machine hosting the NameNode service of the cluster. If you are using WebHDFS, the location should be webhdfs://masternode:portnumber; WebHDFS with SSL is not supported yet.

-

In the Username field, enter the authentication information used to connect to the HDFS system to be used. Note that the user name must be the same as the one you have entered in the Spark configuration tab.
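The expected shape of the NameNode URI can be checked before it is typed into the component. The Python sketch below is only an illustration under stated assumptions: the `hdfs` scheme, the host name `masternode` and the port numbers are examples, and `check_namenode_uri` is a hypothetical helper. It rejects the SSL variant of WebHDFS because the text above states that it is not supported.

```python
from urllib.parse import urlparse

# Schemes accepted by this sketch; "swebhdfs" (WebHDFS over SSL) is
# deliberately absent because the documentation says it is not supported.
ALLOWED_SCHEMES = ("hdfs", "webhdfs")

def check_namenode_uri(uri):
    """Return (scheme, host, port) or raise ValueError for an unusable URI."""
    parsed = urlparse(uri)
    if parsed.scheme not in ALLOWED_SCHEMES:
        raise ValueError(f"unsupported scheme: {parsed.scheme!r}")
    if not parsed.hostname or parsed.port is None:
        raise ValueError("expected <scheme>://<host>:<port>")
    return parsed.scheme, parsed.hostname, parsed.port

# Example values only; use the host and port of your own NameNode.
scheme, host, port = check_namenode_uri("webhdfs://masternode:50070")
```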

Configuring the filtering process to keep only the suspect pairs labeled as

duplicates

-

Double-click the first tFilterRow component to open its Basic settings view.

-

Click the Sync columns button to retrieve the schema from the previous component.

-

In the Conditions table, add a condition and fill in the filtering parameters:

-

From the Input Column list, select the column which holds the labels set on the records, LABEL in this example.

-

From the Function list, select Empty.

-

From the Operator list, select ==.

-

In the Value column, enter the label used to identify duplicates, "YES" in this example.
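The condition configured above keeps a row only when its label equals the duplicate label. A minimal Python sketch of the same filter follows; the sample rows and the `Original_Id` values are invented for illustration, and `keep_duplicates` is a hypothetical helper rather than anything the component exposes.

```python
def keep_duplicates(rows, label_column="LABEL", duplicate_label="YES"):
    """Keep only the rows whose label marks them as duplicates."""
    return [row for row in rows if row.get(label_column) == duplicate_label]

# Hypothetical suspect pairs already labeled upstream.
pairs = [
    {"Original_Id": "1", "LABEL": "YES"},
    {"Original_Id": "2", "LABEL": "YES"},
    {"Original_Id": "3", "LABEL": "NO"},
]

duplicates = keep_duplicates(pairs)
```

The same comparison applies to any other equality condition on a column: only the column name and the expected value change.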

Defining the survivor validation flow

-

Double-click tRuleSurvivorship to open its Basic settings view.

-

Click the Sync columns button to retrieve the schema from the previous component.

-

From the list, select the column to be used as a Group Identifier.

-

In the Rule package name field, enter the name of the rule package you need to create to define the survivor validation flow of interest, org.talend.survivorship.sample in this example.

-

In the Rule table, click the [+] button to add as many rows as required and complete them using the corresponding rule definitions.

-

Next to Generate rules and survivorship flow, click the icon to generate the rule package with the contents you have defined.

You can find the generated rule package in the Metadata > Rules Management > Survivorship Rules directory of Talend Studio Repository. From there, you can open the survivor validation flow created in this example and read its diagram.
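Conceptually, the rule table says how the surviving value of each column is chosen within a group of duplicates. The Python sketch below illustrates that idea only; it is not the rule engine the component generates. The group column name `GRP_ID`, the two rule functions `longest` and `most_common`, and the sample records are assumptions made for this example.

```python
from collections import Counter, defaultdict

def longest(values):
    """Pick the longest string among the candidate values."""
    return max(values, key=len)

def most_common(values):
    """Pick the value that occurs most often in the group."""
    return Counter(values).most_common(1)[0][0]

# Hypothetical rule table: output column -> function choosing its value.
RULES = {"Site_name": longest, "Address": most_common}

def build_survivors(rows, group_column, rules):
    """Create one survivor record per group of duplicates."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_column]].append(row)
    survivors = []
    for group_id, members in groups.items():
        survivor = {group_column: group_id, "SURVIVOR": True}
        for column, rule in rules.items():
            survivor[column] = rule([m[column] for m in members])
        survivors.append(survivor)
    return survivors

rows = [
    {"GRP_ID": "g1", "Site_name": "Lincoln School", "Address": "12 Main St"},
    {"GRP_ID": "g1", "Site_name": "Lincoln Elementary School", "Address": "12 Main St"},
    {"GRP_ID": "g1", "Site_name": "Lincoln Sch.", "Address": "12 Main Street"},
]

survivors = build_survivors(rows, "GRP_ID", RULES)
```

Each column is resolved independently, so the survivor can combine the name from one record with the address from another, which is the "best-of-breed" idea behind a survivor record.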

Configuring the filtering process to keep only the survivors

-

Double-click tFilterRow to open its Basic settings view.

-

Click the Sync columns button to retrieve the schema from the previous component.

-

In the Conditions table, add a condition and fill in the filtering parameters:

-

From the Input Column list, select the SURVIVOR column.

-

From the Function list, select Empty.

-

From the Operator list, select ==.

-

In the Value column, enter "true".

Configuring the output component for the survivors

-

Double-click the first tFileOutputDelimited component to display the Basic settings view and define the component properties.

You have already accepted to propagate the schema to the output components when you defined the input component.

-

Clear the Define a storage configuration component check box to use the local system as your target file system.

-

In the Folder field, set the path to the folder which will hold the output data.

-

From the Action list, select the operation for writing data:

-

Select Create when you run the Job for the first time.

-

Select Overwrite to replace the file every time you run the Job.

-

Set the row and field separators in the corresponding fields.

Merging the survivors with the unique rows

Configuring the input components

-

Double-click the first tFileInputDelimited to open its Basic settings view.

The input data must be the survivors from the first subJob of this scenario.

-

Click the […] button next to Edit schema and use the [+] button in the dialog box to add columns.

The input schema must be the same as the survivors output in the first subJob.

-

Click OK in the dialog box and accept to propagate the changes when prompted.

-

In the Folder/File field, set the path to the input files.

-

Set the row and field separators in the corresponding fields and the header and footer, if any.

-

Double-click the second tFileInputDelimited to open its Basic settings view and define its properties.

The input data must be the unique rows output by the tMatchPairing component.

The input schema must be the same as the survivors output in the first subJob.

Configuring the filtering process to remove unwanted columns

-

Double-click tFilterColumns to open its Basic settings view.

-

Click the […] button next to Edit schema.

-

In the dialog box, select the unwanted columns and click [x] to remove them from the output schema.

In this example, keep the columns corresponding to the list of education centers: Original_Id, Source, Site_name and Address.
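Removing the unwanted columns is a projection onto the four columns named above. A minimal Python sketch, assuming rows are held as dictionaries and that the extra columns (`GRP_ID` and `SURVIVOR` here) are examples of what gets dropped:

```python
# Columns kept in this example, as listed in the text above.
KEEP = ["Original_Id", "Source", "Site_name", "Address"]

def filter_columns(rows, keep=KEEP):
    """Return rows restricted to the kept columns, in the order given."""
    return [{column: row[column] for column in keep} for row in rows]

# Hypothetical survivor row carrying two technical columns to drop.
survivor_rows = [
    {
        "Original_Id": "1",
        "Source": "A",
        "Site_name": "Lincoln Elementary School",
        "Address": "12 Main St",
        "GRP_ID": "g1",
        "SURVIVOR": "true",
    }
]

projected = filter_columns(survivor_rows)
```

Projecting both inputs onto the same column list is what makes their schemas identical, which the merging step that follows relies on.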

Configuring the merging process

-

Double-click tUnite to open its Basic settings view.

-

Click […] next to Edit schema to check that the output schema corresponds to the schema from the input tFileInputDelimited components.

-

Double-click the first tFileOutputDelimited component to display the Basic settings view and define the component properties.

You have already accepted to propagate the schema to the output components when you defined the input component.

-

Clear the Define a storage configuration component check box to use the local system as your target file system.

-

In the Folder field, set the path to the folder which will hold the output data.

-

From the Action list, select the operation for writing data:

-

Select Create when you run the Job for the first time.

-

Select Overwrite to replace the file every time you run the Job.

-

Set the row and field separators in the corresponding fields.

-

Select the Merge results to single file check box, and in the Merge file path field, set the path of the file that will hold the clean and deduplicated data set.
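The merging steps above amount to concatenating two inputs that share a schema and writing the result as one delimited file. The Python sketch below illustrates this under stated assumptions: the rows are invented, the field separator is ";", and the output is written to an in-memory buffer rather than to the path set in the Merge file path field. The helpers `unite` and `write_delimited` are hypothetical names.

```python
import csv
import io

COLUMNS = ["Original_Id", "Source", "Site_name", "Address"]

def unite(*inputs):
    """Concatenate several row lists that share the same schema."""
    merged = []
    for rows in inputs:
        merged.extend(rows)
    return merged

def write_delimited(rows, columns, field_sep=";"):
    """Serialize rows as delimited text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=columns, delimiter=field_sep, lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

# Hypothetical survivors and unique rows with identical columns.
survivors = [{"Original_Id": "1", "Source": "A",
              "Site_name": "Lincoln Elementary School", "Address": "12 Main St"}]
unique_rows = [{"Original_Id": "7", "Source": "B",
                "Site_name": "Oak Academy", "Address": "4 Elm Rd"}]

merged_text = write_delimited(unite(survivors, unique_rows), COLUMNS)
```

Writing to a real file instead only requires replacing the buffer with a file opened using `open(path, "w", newline="")`.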

Executing the Job

A single representation for each duplicates group is created and merged with the

unique rows in a single file.

The data set is now clean and deduplicated.

You can use tMatchIndex to index this reference data set in

Elasticsearch for continuous matching purposes.

You can find an example of how to index a reference data set

in Elasticsearch on Talend Help Center (https://help.talend.com).

Converting the Standard Job to a Spark Batch Job

Procedure

-

In the Repository tree view of the Integration perspective of Talend Studio, right-click the Job you have created in the earlier scenario to open its contextual menu and select Edit properties.

Then the Edit properties dialog box is displayed. The Job must be closed before you are able to make any changes in this dialog box.

Note that you can change the Job name as well as the other descriptive information about the Job from this dialog box.

-

From the Job Type list, select Big Data Batch.

-

From the Framework list, select Spark. Then a Spark Job using the same name appears under the Big Data Batch sub-node of the Job Design node.

If you need to create this Spark Job from scratch, you have to right-click the Job Design node or the Big Data

Batch sub-node and select Create Big Data Batch

Job from the contextual menu. Then an empty Job is opened in the

workspace.

Selecting the Spark mode

Depending on the Spark cluster to be used, select a Spark mode for your Job.

The Spark documentation provides an exhaustive list of Spark properties and

their default values at Spark Configuration. A Spark Job designed in the Studio uses

this default configuration except for the properties you explicitly defined in the

Spark Configuration tab or the components

used in your Job.

-

Click Run to open its view and then click the Spark Configuration tab to display its view for configuring the Spark connection.

-

Select the Use local mode check box to test your Job locally.

In local mode, the Studio builds the Spark environment within itself on the fly in order to run the Job in it. Each processor of the local machine is used as a Spark worker to perform the computations.

In this mode, your local file system is used; therefore, deactivate the configuration components such as tS3Configuration or tHDFSConfiguration that provide connection information to a remote file system, if you have placed these components in your Job.

You can launch your Job without any further configuration.

-

Clear the Use local mode check box to display the list of the available Hadoop distributions and from this list, select the distribution corresponding to your Spark cluster to be used.

This distribution could be:

-

For this distribution, Talend supports:

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Standalone

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Yarn client

-

-

For this distribution, Talend supports:

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Standalone

-

Yarn client

-

Yarn cluster

-

-

For this distribution, Talend supports:

-

Yarn cluster

-

-

Cloudera Altus

For this distribution, Talend supports:

-

Yarn cluster

Your Altus cluster should run on the following Cloud providers:

-

Azure

The support for Altus on Azure is a technical preview feature.

-

AWS

As a Job relies on Avro to move data among its components, it is recommended to set your cluster to use Kryo to handle the Avro types. This not only helps avoid this known Avro issue but also brings inherent performance gains. The Spark property to be set in your cluster is:

spark.serializer org.apache.spark.serializer.KryoSerializer

If you cannot find the distribution corresponding to yours in this drop-down list, the distribution you want to connect to is not officially supported by Talend. In this situation, you can select Custom, then select the Spark version of the cluster to be connected and click the [+] button to display the dialog box in which you can alternatively:

-

Select Import from existing version to import an officially supported distribution as a base, and then add the other required jar files that the base distribution does not provide.

-

Select Import from zip to import the configuration zip for the custom distribution to be used. This zip file should contain the libraries of the different Hadoop/Spark elements and the index file of these libraries.

In Talend Exchange, members of the Talend community have shared some ready-to-use configuration zip files, which you can download from this Hadoop configuration list and use directly in your connection. However, because the different Hadoop-related projects keep evolving, you might not find the configuration zip corresponding to your distribution in this list. In that case, it is recommended to use the Import from existing version option to take an existing distribution as a base and add the jars required by your distribution.

Note that custom versions are not officially supported by Talend. Talend and its community provide you with the opportunity to connect to custom versions from the Studio but cannot guarantee that the configuration of whichever version you choose will be easy. As such, you should only attempt to set up such a connection if you have sufficient Hadoop and Spark experience to handle any issues on your own.

For a step-by-step example about how to connect to a custom

distribution and share this connection, see Hortonworks.

Configuring the connection to the file system to be used by Spark

Skip this section if you are using Google Dataproc or HDInsight, as for these two

distributions, this connection is configured in the Spark

configuration tab.

-

Double-click tHDFSConfiguration to open its Component view.

Spark uses this component to connect to the HDFS system to which the jar files dependent on the Job are transferred.

-

If you have defined the HDFS connection metadata under the Hadoop cluster node in Repository, select Repository from the Property type drop-down list and then click the […] button to select the HDFS connection you have defined from the Repository content wizard.

For further information about setting up a reusable HDFS connection, search for centralizing HDFS metadata on Talend Help Center (https://help.talend.com).

If you complete this step, you can skip the following steps about configuring tHDFSConfiguration because all the required fields should have been filled automatically.

-

In the Version area, select the Hadoop distribution you need to connect to and its version.

-

In the NameNode URI field, enter the location of the machine hosting the NameNode service of the cluster. If you are using WebHDFS, the location should be webhdfs://masternode:portnumber; WebHDFS with SSL is not supported yet.

-

In the Username field, enter the authentication information used to connect to the HDFS system to be used. Note that the user name must be the same as the one you have entered in the Spark configuration tab.