tSortRow

Helps creating metrics and

classification table.

tSortRow sorts input data based on one or several

columns, by sort type and order.

Depending on the Talend

product you are using, this component can be used in one, some or all of the following

Job frameworks:

-

Standard: see tSortRow Standard properties.

The component in this framework is available in all Talend

products. -

MapReduce: see tSortRow MapReduce properties (deprecated).

The component in this framework is available in all subscription-based Talend products with Big Data

and Talend Data Fabric. -

Spark Batch:

see tSortRow properties for Apache Spark Batch.The component in this framework is available in all subscription-based Talend products with Big Data

and Talend Data Fabric. -

Spark Streaming:

see tSortRow properties for Apache Spark Streaming.This component is available in Talend Real Time Big Data Platform and Talend Data Fabric.

tSortRow Standard properties

These properties are used to configure tSortRow running in the Standard Job framework.

The Standard

tSortRow component belongs to the Processing family.

The component in this framework is available in all Talend

products.

Basic settings

|

Schema and Edit |

A schema is a row description. It defines the number of fields Click Edit

Click Sync columns to retrieve This This |

|

|

Built-In: You create and store the schema locally for this component |

|

|

Repository: You have already created the schema and stored it in the |

|

Criteria |

Click + to add as many lines as required for the sort to be |

|

|

Schema column: Select the column |

|

|

Sort type: Numerical and |

|

|

Order: Ascending or descending |

Advanced settings

|

Sort on disk |

Customize the memory used to temporarily store output data.

Temp data directory path: Set the

Create temp data directory if not

Buffer size of external sort: Type |

|

tStatCatcher Statistics |

Select this check box to gather the Job processing metadata at the Job level as well as |

Global Variables

|

Global Variables |

ERROR_MESSAGE: the error message generated by the A Flow variable functions during the execution of a component while an After variable To fill up a field or expression with a variable, press Ctrl + For further information about variables, see |

Usage

|

Usage rule |

This component handles flow of data therefore it requires input |

Sorting entries

This scenario describes a three-component Job. A tRowGenerator is used to create random entries which are directly sent

to a tSortRow to be ordered following a defined value

entry. In this scenario, we suppose the input flow contains names of salespersons along

with their respective sales and their years of presence in the company. The result of

the sorting operation is displayed on the Run

console.

-

Drop the three components required for this use case: tRowGenerator, tSortRow and

tLogRow from the Palette to the design workspace. -

Connect them together using Row

main links. -

On the tRowGenerator editor, define the

values to be randomly used in the Sort component. For more information regarding

the use of this particular component, see tRowGenerator

-

In this scenario, we want to rank each salesperson according to its

Sales value and to its number of years in the

company. -

Double-click tSortRow to display the

Basic settings tab panel. Set the sort

priority on the Sales value and as secondary criteria, set the number of years

in the company.

-

Use the plus button to add the number of rows required. Set the type of

sorting, in this case, both criteria being integer, the sort is numerical. At

last, given that the output wanted is a rank classification, set the order as

descending. -

Display the Advanced Settings tab and select

the Sort on disk check box to modify the

temporary memory parameters. In the Temp data directory

path field, type the path to the directory where you want to

store the temporary data. In the Buffer size of external

sort field, set the maximum buffer value you want to allocate to

the processing.

The default buffer value is 1000000 but the more rows and/or columns you

process, the higher the value needs to be to prevent the Job from automatically

stopping. In that event, an “out of memory” error message displays.

-

Make sure you connected this flow to the output component, tLogRow, to display the result in the Job

console. -

Press F6 to run the Job. The ranking is based

first on the Sales value and then on the number of years of experience.

Sorting entries based on dynamic schema

This scenario applies only to subscription-based Talend products.

In this scenario we will sort entries in an input file based on a dynamic schema,

display the sorting result on the Run console, and save

the sorting result in an output file. For more information about the dynamic schema

feature, see

Talend Studio User

Guide.

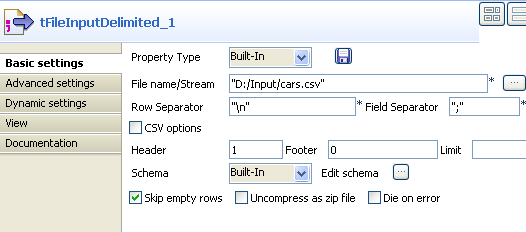

-

Drop the components required for this use case: tFileInputDelimited, tSortRow,

tLogRow and tFileOutputDelimited from the Palette to the design workspace. -

Connect these components together using Row

> Main links. -

Double-click the tFileInputDelimited

component to display its Basic settings view.

The dynamic schema feature is only supported in Built-In mode and requires the input file to have a

header row.

-

Select Built-In from the Property Type list.

-

Click the […] button next to the File Name field to browse to your input file. In this

use case, the input file cars.csv has five columns:

ID_Owner, Registration,

Make, Color, and

ID_Reseller. -

Specify the header row in Header field. In

this use case the first row is the header row. -

Select Built-In from the Schema list, and click Edit

schema to set the input schema.

The dynamic column must be defined in the last row of the

schema.

-

In the schema editor, add two columns and name them

ID_Owner and Other respectively.

Set the data type of the Other column to Dynamic to retrieve all the columns undefined in the

schema. -

Click OK to propagate the schema and close

the schema editor. -

Double-click tSortRow to display the

Basic settings view.

-

Add a row in the Criteria table by clicking

the plus button, select Other under Schema column, select alpha as the sorting

type, and select the ascending or

descending order for data output.

Dynamic column sorting works only when the sorting type is

set to alpha.

-

To view the output in the form of a table on the Run console, double-click the tLogRow component and select the Table option in the Basic

settings view. -

Double-click the tFileOutputDelimited

component to display its Basic Settings

view.

-

Click the […] button next to the File Name field to browse to the directory where you

want to save the output file, and then enter a name for the file. -

Select the Include Header check box to

retrieve the column names as well as the sorted data. -

Save your Job and press F6 to run it.

The sorting result is displayed on the Run

console and written into the output file.

tSortRow MapReduce properties (deprecated)

These properties are used to configure tSortRow running in the MapReduce Job framework.

The MapReduce

tSortRow component belongs to the Processing family.

The component in this framework is available in all subscription-based Talend products with Big Data

and Talend Data Fabric.

The MapReduce framework is deprecated from Talend 7.3 onwards. Use Talend Jobs for Apache Spark to accomplish your integration tasks.

Basic settings

|

Schema and Edit |

A schema is a row description. It defines the number of fields Click Edit

Click Sync columns to retrieve |

|

|

Built-In: You create and store the schema locally for this component |

|

|

Repository: You have already created the schema and stored it in the |

|

Criteria |

Click + to add as many lines as required for the sort to be |

|

|

Schema column: Select the column |

|

|

Sort type: Numerical and |

|

|

Order: Ascending or descending |

Global Variables

|

Global Variables |

ERROR_MESSAGE: the error message generated by the A Flow variable functions during the execution of a component while an After variable To fill up a field or expression with a variable, press Ctrl + For further information about variables, see |

Usage

|

Usage rule |

In a For further information about a For a scenario demonstrate a Map/Reduce Job using this component, Note that in this documentation, unless otherwise |

Related scenarios

No scenario is available for the Map/Reduce version of this component yet.

tSortRow properties for Apache Spark Batch

These properties are used to configure tSortRow running in the Spark Batch Job framework.

The Spark Batch

tSortRow component belongs to the Processing family.

The component in this framework is available in all subscription-based Talend products with Big Data

and Talend Data Fabric.

Basic settings

|

Schema and Edit |

A schema is a row description. It defines the number of fields Click Edit

Click Sync columns to retrieve |

|

|

Built-In: You create and store the schema locally for this component |

|

|

Repository: You have already created the schema and stored it in the |

|

Criteria |

Click + to add as many lines as required for the sort to be |

|

|

Schema column: Select the column |

|

|

Sort type: Numerical and |

|

|

Order: Ascending or descending |

Usage

|

Usage rule |

This component is used as an intermediate step. This component, along with the Spark Batch component Palette it belongs to, Note that in this documentation, unless otherwise explicitly stated, a |

|

Spark Connection |

In the Spark

Configuration tab in the Run view, define the connection to a given Spark cluster for the whole Job. In addition, since the Job expects its dependent jar files for execution, you must specify the directory in the file system to which these jar files are transferred so that Spark can access these files:

This connection is effective on a per-Job basis. |

Related scenarios

No scenario is available for the Spark Batch version of this component

yet.

tSortRow properties for Apache Spark Streaming

These properties are used to configure tSortRow running in the Spark Streaming Job framework.

The Spark Streaming

tSortRow component belongs to the Processing family.

This component is available in Talend Real Time Big Data Platform and Talend Data Fabric.

Basic settings

|

Schema and Edit |

A schema is a row description. It defines the number of fields Click Edit

Click Sync columns to retrieve |

|

|

Built-In: You create and store the schema locally for this component |

|

|

Repository: You have already created the schema and stored it in the |

|

Criteria |

Click + to add as many lines as required for the sort to be |

|

|

Schema column: Select the column |

|

|

Sort type: Numerical and |

|

|

Order: Ascending or descending |

Usage

|

Usage rule |

This component is used as an intermediate step. This component, along with the Spark Streaming component Palette it belongs to, appears Note that in this documentation, unless otherwise explicitly stated, a scenario presents |

|

Spark Connection |

In the Spark

Configuration tab in the Run view, define the connection to a given Spark cluster for the whole Job. In addition, since the Job expects its dependent jar files for execution, you must specify the directory in the file system to which these jar files are transferred so that Spark can access these files:

This connection is effective on a per-Job basis. |

Related scenarios

No scenario is available for the Spark Streaming version of this component

yet.